Microsoft has released a new Agent Framework for .NET (it works alongside the Azure OpenAI .NET SDK rather than being part of it), finally giving us a way to build stateful, tool-using AI agents without writing awkward workarounds or building our own memory system.

What I will show you in this article:

- What an agent actually is

- How agent tools work

- How memory works and how agents build context

I'm going to build a very simple example: a Travel Planning Agent with a Weather tool and User Memory.

If you've only used raw chat completions before, this will feel like a game changer.

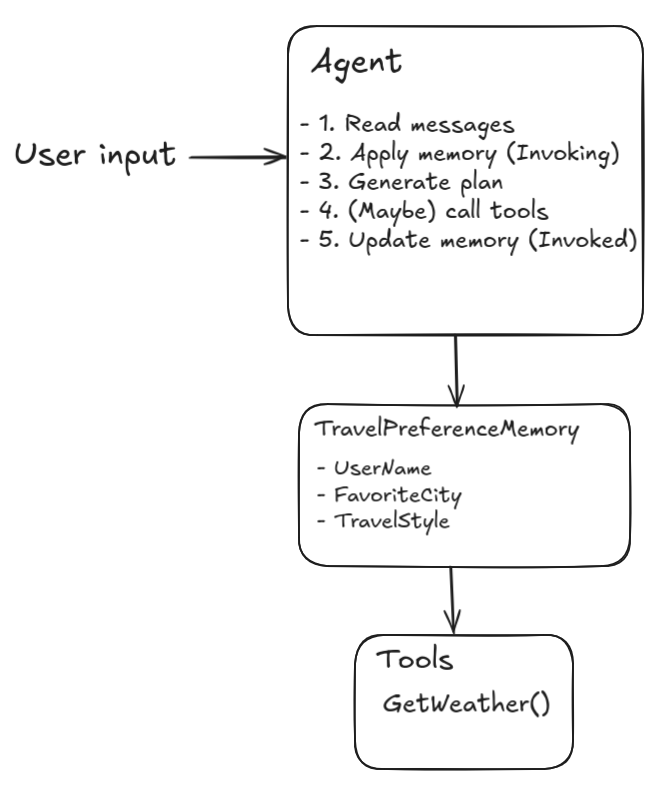

What is an Agent

An agent is not just "GPT with instructions".

A normal LLM call is stateless.

An agent is stateful and persistent, and it has capabilities of its own.

Essentially, an agent can remember things across turns, decide when to call code (tools), add instructions dynamically at runtime, maintain persistent state between sessions, and switch between "thinking", "tool-calling" and, of course, "responding".
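To make the stateless/stateful difference concrete, here is a minimal sketch that does not use the Agent Framework at all. FakeModel, StatelessCall and AgentTurn are hypothetical names invented for this illustration; the only point is that the "agent" keeps a history that grows with every turn.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a model call: it only sees the messages passed in.
static string FakeModel(IReadOnlyList<string> messages) =>
    $"(model saw {messages.Count} message(s))";

// Stateless: every call starts from scratch.
string StatelessCall(string userMessage) => FakeModel(new[] { userMessage });

// Stateful: the history lives outside the call and grows with every turn.
var history = new List<string>();

string AgentTurn(string userMessage)
{
    history.Add(userMessage);
    var reply = FakeModel(history);
    history.Add(reply);
    return reply;
}

Console.WriteLine(StatelessCall("Hi"));            // (model saw 1 message(s))
Console.WriteLine(StatelessCall("Remember me?"));  // (model saw 1 message(s))
Console.WriteLine(AgentTurn("Hi"));                // (model saw 1 message(s))
Console.WriteLine(AgentTurn("Remember me?"));      // (model saw 3 message(s))
```

The second agent turn sees three messages (the first question, the first reply and the new question), which is roughly the bookkeeping a thread does for you.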

Giving our Agent tools

A tool is nothing more than a normal C# method annotated with metadata. The agent can invoke it whenever it deems necessary.

For example:

[Description("Get the weather for a given location")]
static string GetWeather([Description("The location to get the weather for")] string location)
{
    // Log each call so we can see when the agent decides to use the tool
    Console.WriteLine($"[Tool invoked] GetWeather('{location}')");

    // Hard-coded demo data; a real tool would call a weather API
    return location switch
    {
        "Giannitsa" => "Sunny, 24°C",
        "Athens" => "Partly cloudy, 30°C",
        _ => "Clear skies, 20°C"
    };
}

Register it:

var weatherTool = AIFunctionFactory.Create(GetWeather);

Add to the agent:

ChatOptions = new ChatOptions
{
    Tools = [weatherTool]
}

Now the agent knows what the tool does and how to call it, and it decides for itself when to call it. Whenever the user asks "What's the weather like in Athens?", the agent triggers the tool instead of guessing.
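If you are wondering where that knowledge comes from: the descriptions are ordinary .NET attributes, so anything can read them through reflection. The sketch below is not how AIFunctionFactory is implemented (I have not shown its internals here); DescribeTool is a hypothetical helper that only demonstrates that the metadata is available at runtime.

```csharp
using System;
using System.ComponentModel;
using System.Linq;
using System.Reflection;

// Hypothetical helper: reads the [Description] metadata from a method and its parameters.
static string DescribeTool(Delegate tool)
{
    MethodInfo method = tool.Method;
    string summary = method.GetCustomAttribute<DescriptionAttribute>()?.Description
                     ?? "(no description)";

    var parameters = method.GetParameters().Select(p =>
        $"{p.Name}: {p.GetCustomAttribute<DescriptionAttribute>()?.Description ?? "(no description)"}");

    return $"{summary} ({string.Join("; ", parameters)})";
}

// Trimmed-down copy of the weather tool, just to have something to inspect.
[Description("Get the weather for a given location")]
static string GetWeather([Description("The location to get the weather for")] string location) =>
    location == "Athens" ? "Partly cloudy, 30°C" : "Clear skies, 20°C";

Console.WriteLine(DescribeTool((Func<string, string>)GetWeather));
```

This is why good descriptions matter: they are the only documentation the model gets about your tool.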

Giving our Agent a brain

Microsoft here gives us true structured memory using:

AIContextProvider + Your own type model + Automatic serialization

But how does it actually work?

Every time the agent runs, it executes two hooks:

InvokingAsync -> runs BEFORE the model generates output

InvokedAsync -> runs AFTER the model has generated output

By using them we can extract user data from the conversation, update our custom memory object, inject new dynamic instructions, persist state across turns and, of course, serialize/deserialize the memory object into the thread. (What thread? You'll see shortly.)
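The order of the two hooks is easier to see in a toy version. The sketch below is plain C# with no framework types; Invoking, Invoked and Run are hypothetical names that mimic the before/after steps, and the model call itself is left out.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical memory: a single remembered fact.
string? userName = null;
var log = new List<string>();

// "Invoking" step: build extra instructions from what is known so far.
string Invoking() =>
    userName is null ? "Ask the user for their name." : $"The user's name is {userName}.";

// "Invoked" step: inspect the user's message and update the memory.
void Invoked(string userMessage)
{
    const string marker = "My name is ";
    if (userName is null && userMessage.StartsWith(marker, StringComparison.Ordinal))
        userName = userMessage[marker.Length..].Trim();
}

// One run = invoking hook -> model call (omitted here) -> invoked hook.
void Run(string userMessage)
{
    log.Add($"before '{userMessage}': {Invoking()}");
    Invoked(userMessage);
}

Run("Hi there!!");
Run("My name is Elijah");
Run("I like to travel to Athens");

log.ForEach(Console.WriteLine);
```

Notice that the name only shows up in the instructions of the third run: the "after" hook of one turn feeds the "before" hook of the next.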

Creating the Agent

// uri and key are your Azure OpenAI endpoint and API key
ChatClient chatClient = new AzureOpenAIClient(
        new Uri(uri),
        new AzureKeyCredential(key))
    .GetChatClient("gpt-4o-mini"); // the name of your model deployment

var agent = chatClient.CreateAIAgent(new ChatClientAgentOptions
{
    Instructions = """
        You are a friendly travel assistant.
        Always call the user by their name.
        """,
    Name = "TravelAgent",
    // Builds the memory provider, restoring it from serialized state when there is any
    AIContextProviderFactory = ctx => new TravelPreferencesMemory(
        chatClient.AsIChatClient(),
        ctx.SerializedState,
        ctx.JsonSerializerOptions
    ),
    ChatOptions = new ChatOptions
    {
        Tools = [weatherTool]
    }
});

Creating a Thread

Thread = long-term conversation + serialized memory

var thread = agent.GetNewThread();

Essentially, every agent.RunAsync(message, thread) call continues the same conversation and loads and updates the memory stored with that thread.

The Memory System

TravelPreferencesMemory keeps everything it learns in a TravelPreferences object:

internal sealed class TravelPreferences
{
    public string? UserName { get; set; }
    public string? FavoriteCity { get; set; }
    public string? TravelStyle { get; set; }
}
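Because this is a plain object with three nullable strings, it round-trips through JSON without any extra work. The sketch below uses only System.Text.Json and shows the kind of save/restore a memory provider could perform with the serialized state it receives; how TravelPreferencesMemory actually does it is an assumption on my part, since that constructor is not shown here.

```csharp
using System;
using System.Text.Json;

var original = new TravelPreferences { UserName = "Elijah", FavoriteCity = "Athens" };

// Memory -> JSON: what could be stored alongside the thread.
JsonElement state = JsonSerializer.SerializeToElement(original);

// JSON -> memory: fall back to an empty object when there is no saved state yet.
TravelPreferences restored = state.ValueKind == JsonValueKind.Object
    ? state.Deserialize<TravelPreferences>()!
    : new TravelPreferences();

Console.WriteLine(state.GetRawText());
Console.WriteLine($"{restored.UserName} likes {restored.FavoriteCity}; style known: {restored.TravelStyle is not null}");

// Same shape as the article's model; public here so the sketch is self-contained.
public sealed class TravelPreferences
{
    public string? UserName { get; set; }
    public string? FavoriteCity { get; set; }
    public string? TravelStyle { get; set; }
}
```

The ValueKind check matters for brand new threads, where there is nothing to deserialize yet.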

InvokedAsync

Runs after each agent run, and its job is to mine the user messages for preferences

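public override async ValueTask InvokedAsync(InvokedContext context, CancellationToken cancellationToken = default)
{
    // Nothing left to learn once every preference is known
    if (Preferences.UserName is not null && Preferences.FavoriteCity is not null && Preferences.TravelStyle is not null)
        return;

    // Only mine runs that actually contain a user message
    if (!context.RequestMessages.Any(m => m.Role == ChatRole.User))
        return;

    // Ask the model for a structured TravelPreferences object
    var result = await _chatClient.GetResponseAsync<TravelPreferences>(
        context.RequestMessages,
        new ChatOptions
        {
            Instructions = "Extract the user's name, favorite city and preferred travel style ('adventure' or 'relaxed'). If not present, return nulls"
        },
        cancellationToken: cancellationToken
    );

    // Keep the values we already have and only fill in the gaps
    Preferences.UserName ??= result.Result.UserName;
    Preferences.FavoriteCity ??= result.Result.FavoriteCity;
    Preferences.TravelStyle ??= result.Result.TravelStyle;
}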
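One detail worth pausing on is the ??= operator: it assigns only when the left side is still null, so the first value the agent learns wins. Here is a tiny sketch of that behavior, where ExtractCity is a hypothetical stand-in for the structured model call:

```csharp
using System;

// Hypothetical extractor: returns a city if the message mentions one, otherwise null.
static string? ExtractCity(string message)
{
    foreach (var city in new[] { "Athens", "Giannitsa" })
        if (message.Contains(city, StringComparison.OrdinalIgnoreCase))
            return city;
    return null;
}

string? favoriteCity = null;

favoriteCity ??= ExtractCity("Hi there!!");                   // nothing found, stays null
favoriteCity ??= ExtractCity("I like to travel to Athens");   // first value wins
favoriteCity ??= ExtractCity("Actually, make it Giannitsa");  // ignored, already set

Console.WriteLine(favoriteCity); // prints "Athens"
```

That keeps the memory stable, but it also means a user who changes their mind is not picked up. If you need that, you would have to replace ??= with your own merge rule.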

InvokingAsync

Runs before the agent generates a reply. It injects dynamic instructions depending on what is known. For example:

If name is missing:

Ask the user for their name and decline to answer until they provide it

If travel style is missing:

Ask the user whether they prefer adventure or relaxed travel style
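As a rough sketch of how those rules could turn into text, here is a plain C# helper that builds the extra instructions from whatever is known. BuildInstructions is a hypothetical name, and the favorite city wording is my own assumption; in the real provider the resulting string would be handed back to the framework from InvokingAsync.

```csharp
using System;
using System.Text;

// Hypothetical helper: turns what is known into extra instructions for this turn.
static string BuildInstructions(string? userName, string? favoriteCity, string? travelStyle)
{
    var sb = new StringBuilder();

    sb.AppendLine(userName is null
        ? "Ask the user for their name and decline to answer until they provide it."
        : $"The user's name is {userName}.");

    // Assumed wording: the city rule is not spelled out in the list above.
    sb.AppendLine(favoriteCity is null
        ? "Ask the user which city they like to travel to."
        : $"The user's favorite city is {favoriteCity}.");

    sb.AppendLine(travelStyle is null
        ? "Ask the user whether they prefer adventure or relaxed travel style."
        : $"The user prefers a {travelStyle} travel style.");

    return sb.ToString();
}

Console.WriteLine(BuildInstructions(null, null, null));
Console.WriteLine(BuildInstructions("Elijah", "Athens", null));
```

Because the text is rebuilt on every run, the agent's behavior changes as soon as the memory does, without touching the base instructions.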

This is, in my opinion, a game changer, and I will show you why with an example. Let's say you don't provide your name. The conversation with the agent would go like this:

Me: Hi there!!

Agent: Hello! May I know your name, please?

Me: I like to travel to Athens

Agent: Nice to meet you! What's your name?

Me: I love visiting my team, Kaizen Labs, for a relaxed trip

Agent: It's great to hear that you enjoy visiting Athens for a relaxed trip! I still need to know your name so I can address you properly. Could you please share it with me?

Me: What's the weather like in my favourite city today?

Agent: I would be happy to help with that! But first, could you please tell me your name?

As you can see, it won't use any tools or answer any of your questions without you providing your name. So, let's provide it :D

Me: Hi there!!

Agent: Hello! May I please know your name?

Me: My name is Elijah # <---- we provide the name

Agent: Nice to meet you, Elijah! Whats your favorite city to travel to?

Me: I like to travel to Athens

Agent: Athens is a fantastic choice, Elijah! Do you prefer an adventure travel style or a more relaxed travel experience?

Me: I love visiting my team, Kaizen Labs, for a relaxed trip

Agent: That sounds wonderful, Elijah! Visiting your team in Athens while enjoying a relaxed trip must be very rewarding. If you need any assistance with travel plans or activities in Athens, just let me know! By the way, would you like to know the current weather in Athens?

Me: Whats the weather like in my favourite city today?

[Tool invoked] GetWeather('Athens') # It used our tool!

Agent: The weather in Athens today is partly cloudy with a temperature of 30°C. It sounds like a lovely day to be out and about! If you need more information or help planning your activities, feel free to ask!

Full Architecture